ARIA-AT Automation Launch

This post first appeared on the Bocoup blog

Automation is unlikely to ever go out of style. We’re constantly looking for ways to automate our lives and work so that the systems we rely on become more efficient and reliable, and the ARIA-AT app is no different. We’ve added automation to the ARIA-AT app, allowing test admins to automatically collect assistive technology responses for test plan runs.

Why Do We Need Automation?

Until now, the ARIA-AT app relied on a group of test admins to run test plans manually and assign verdicts, even when a run used an assistive technology (AT) and browser combination that had been tested before and produced the same results, and therefore the same verdicts, as the previous run. This repetition eats into testers’ time, leaving them less time for test plans that cover a new AT-browser combination or that test new language. Automation removes the need for humans to re-test AT-browser combinations that have already been tested, which lets the ARIA-AT community group start testing new ARIA patterns and move more quickly toward 100% test coverage for existing ones.

Automating the ARIA-AT app also showcases the capabilities of the AT-Driver specification. AT-Driver is the protocol that automation in the app is built on, so the app serves as a real-world example of the protocol in action.
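To make the protocol a little more concrete, here is a minimal Python sketch of the kind of messages a client, such as an automation harness, might exchange with an AT-Driver server. It assumes JSON messages with `id`, `method`, and `params` fields in the style of WebDriver BiDi. The method names `interaction.pressKeys` and `interaction.capturedOutput` and the `keys` and `data` parameters reflect our reading of the AT-Driver draft and should be treated as assumptions to check against the current specification. The helper functions are hypothetical, and the WebSocket transport is left out.

```python
import json
from typing import Iterable, List


def press_keys_command(command_id: int, keys: List[str]) -> str:
    """Serialize a command asking the AT to press a sequence of keys."""
    # Assumed method and parameter names; verify against the AT-Driver draft.
    message = {
        "id": command_id,
        "method": "interaction.pressKeys",
        "params": {"keys": keys},
    }
    return json.dumps(message)


def captured_output(raw_messages: Iterable[str]) -> List[str]:
    """Return the text of each captured-output event, in arrival order."""
    spoken: List[str] = []
    for raw in raw_messages:
        message = json.loads(raw)
        # Events are recognized by their (assumed) method name;
        # replies to commands are ignored here.
        if message.get("method") == "interaction.capturedOutput":
            spoken.append(message["params"]["data"])
    return spoken


if __name__ == "__main__":
    print(press_keys_command(1, ["tab"]))
    # Made-up messages for illustration only.
    replies = [
        '{"id": 1, "result": {}}',
        '{"method": "interaction.capturedOutput", '
        '"params": {"data": "Bold, toggle button, not pressed"}}',
    ]
    print(captured_output(replies))
```

The text collected this way is what the post calls an AT response: the words the screen reader spoke after the test input.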

How Does Automation Work?

Within the app and the wider system, the automation system is called the Response Collector. It collects “AT responses,” our term for the observable behavior of an AT during a test, i.e., the words a screen reader vocalizes in response to the test input. The Response Collector only collects responses for test plan runs that a human has already run at least once, so those runs have associated test results, which we call historic test results in this context. The app represents the automation system as non-human users (e.g., a user named ‘NVDA Bot’) whose actions are modeled on those of a human tester. NVDA Bot can have test plans assigned to it, and a test admin can then cancel, rerun, or view NVDA Bot’s logs. A test admin can also reassign a test plan run to a human at any time; humans remain a key part of this process.
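The idea of a bot as just another user can be sketched as a small data model. This is a hypothetical Python illustration, not the ARIA-AT app’s schema: the `Tester` and `TestPlanRun` classes, their fields, and the status strings are all invented for the example. Assignment works the same way for humans and bots, while cancel and rerun only apply to bot-assigned runs, and the `log` list stands in for the bot’s logs.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Tester:
    """Any account that can work on a test plan run, human or bot."""
    name: str
    is_bot: bool = False


@dataclass
class TestPlanRun:
    """Hypothetical model of a test plan run; names are illustrative only."""
    test_plan: str
    at: str
    browser: str
    assignee: Optional[Tester] = None
    status: str = "unassigned"
    log: List[str] = field(default_factory=list)

    def assign(self, tester: Tester) -> None:
        # Assigning and reassigning work the same way for humans and bots.
        self.assignee = tester
        self.status = "collecting responses" if tester.is_bot else "in progress"
        self.log.append(f"assigned to {tester.name}")

    def _require_bot(self) -> Tester:
        if self.assignee is None or not self.assignee.is_bot:
            raise ValueError("this action only applies to bot-assigned runs")
        return self.assignee

    def cancel(self) -> None:
        bot = self._require_bot()
        self.status = "cancelled"
        self.log.append(f"{bot.name} run cancelled")

    def rerun(self) -> None:
        bot = self._require_bot()
        self.status = "collecting responses"
        self.log.append(f"{bot.name} run restarted")


if __name__ == "__main__":
    nvda_bot = Tester("NVDA Bot", is_bot=True)
    run = TestPlanRun("example plan", "NVDA", "Chrome")
    run.assign(nvda_bot)
    run.cancel()
    run.assign(Tester("alice"))  # a test admin hands the run to a human
    print(run.status)
    print(run.log)
```

Modeling the bot as an ordinary assignee means reassignment to a human needs no special case.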

The Process

- A test admin will assign a test plan run to the Response Collector Bot and specify an AT-Browser combo.

- The Response Collector Bot will create a new test in the associated test plan.

- If a test plan doesn’t have any historic test results, the Response Collector Bot will set the test plan’s status to “skipped” (which cannot be undone) since there aren’t any results to make a comparison with.

- For test plans that do have historic test results, the test plan run progresses as expected, and once the run is complete, the Response Collector Bot compares the current results with the historic results and copies the verdict of the historic test results if there’s a match.

- If there isn’t a match, the test plan run is flagged as having a conflict and is discussed in the ARIA-AT community group.
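The decision logic in these steps can be sketched as a single function. In this hypothetical Python version, responses are compared by exact string equality and verdicts are copied per test; both are assumptions, since the post doesn’t specify the matching rule or its granularity. For brevity, the “skipped” check, which in the real process happens before any responses are collected, is folded into the same function. The verdict labels in the usage are made up.

```python
from typing import Dict, NamedTuple, Optional


class Historic(NamedTuple):
    response: str  # AT output recorded in an earlier, human-run test
    verdict: str   # verdict a human assigned to that output


class RunOutcome(NamedTuple):
    status: str                # "skipped", "conflict", or "complete"
    verdicts: Dict[str, str]   # test id -> verdict copied from history
    conflicts: Dict[str, str]  # test id -> new response that did not match


def evaluate_run(collected: Dict[str, str],
                 historic: Optional[Dict[str, Historic]]) -> RunOutcome:
    # With no historic results there is nothing to compare against.
    if not historic:
        return RunOutcome("skipped", {}, {})
    verdicts: Dict[str, str] = {}
    conflicts: Dict[str, str] = {}
    for test_id, response in collected.items():
        past = historic.get(test_id)
        if past is not None and past.response == response:
            verdicts[test_id] = past.verdict  # same output: reuse the verdict
        else:
            conflicts[test_id] = response     # different or new: needs humans
    status = "conflict" if conflicts else "complete"
    return RunOutcome(status, verdicts, conflicts)


if __name__ == "__main__":
    history = {"t1": Historic("button, not pressed", "pass")}
    print(evaluate_run({"t1": "button, not pressed"}, history))
    print(evaluate_run({"t1": "button"}, history))
```

Any mismatch flags the whole run as a conflict while still keeping the verdicts that did match, which mirrors the post’s split between copied verdicts and runs sent for community discussion.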

What’s Happening Technically?

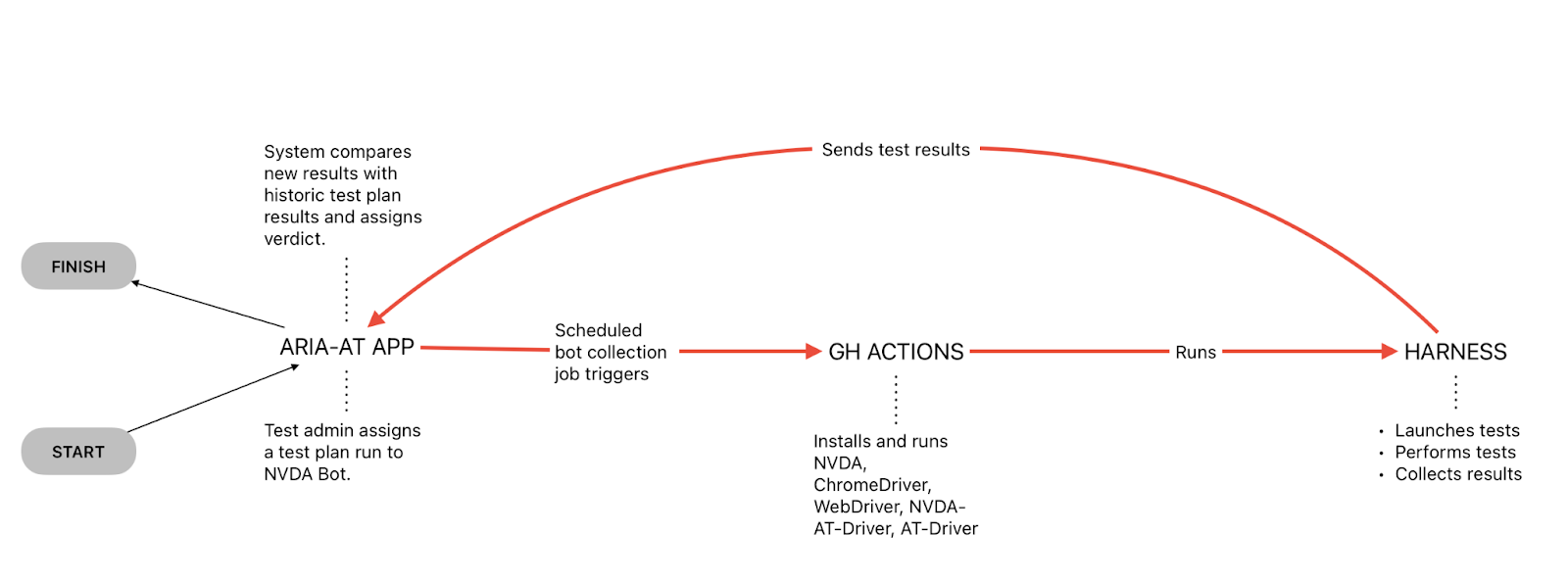

Three main systems work together to make automation possible, with the ARIA-AT app appearing at both the start and the end of the process:

- ARIA-AT App: The ARIA-AT app is the interface where test admins trigger the Response Collector Bot to run an automated test plan. Assigning a test to the Response Collector Bot triggers a GitHub Action.

- GitHub Actions: The workflow runs in a virtual environment where a number of dependencies are installed, most importantly the NVDA implementation of AT-Driver. Once all dependencies are installed, the automation harness starts.

- Automation Harness: The harness launches and performs the test plan run, collects the results, and sends them back to the ARIA-AT app.

- ARIA-AT App: Once the test results are sent back to the app, the system checks them against the historic test results of the test plan run and assigns a verdict depending on whether the results match.
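The post doesn’t say exactly how the app triggers the GitHub Action. As one possibility, this Python sketch assumes the app starts the workflow through GitHub’s `workflow_dispatch` REST endpoint (`POST /repos/{owner}/{repo}/actions/workflows/{workflow_id}/dispatches`), passing the test plan, AT, browser, and a callback URL that the harness could use to send results back. The owner, repository, workflow file name, and input names are all hypothetical. The function only builds the request; nothing is sent.

```python
import json
import urllib.request
from typing import Dict


def build_dispatch_request(owner: str, repo: str, workflow_file: str,
                           token: str, inputs: Dict[str, str],
                           ref: str = "main") -> urllib.request.Request:
    """Build (but do not send) a workflow_dispatch request to GitHub."""
    url = (f"https://api.github.com/repos/{owner}/{repo}"
           f"/actions/workflows/{workflow_file}/dispatches")
    body = json.dumps({"ref": ref, "inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Hypothetical repository, workflow, and input names.
    request = build_dispatch_request(
        "example-org", "example-harness", "collect-responses.yml",
        token="<token>",
        inputs={
            "test_plan": "example plan",
            "at": "nvda",
            "browser": "chrome",
            "callback_url": "https://aria-at.example/api/collection-results",
        },
    )
    print(request.get_method(), request.full_url)
    # urllib.request.urlopen(request) would actually send it.
```

Passing a callback URL as a workflow input is one simple way to let the harness report back without the app polling GitHub, but it is a design assumption, not a detail from the post.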

What’s Next?

While we’re pleased to have launched ARIA-AT automation, this version is a minimum viable product (MVP), and we’re continually working on improvements and new features. We’ve begun talking to JAWS implementers about implementing their own version of AT-Driver so that we can run automated tests with another popular screen reader. We also want to make the system more robust and are exploring other cloud computing environments. Finally, we recognize the need to verify that websites are accessible through automated testing, and we’re developing tools that let web developers build on our work to test their own applications.

How Can You Get Involved?

ARIA-AT Automation and AT-Driver are collaborative efforts among people invested in the Web and in making it accessible and interoperable. To take part in these conversations, you can join the ARIA-AT community group, which is open to all, or the ARIA automation task force, which is open to W3C members. If you’d like to learn more about AT-Driver and get involved in either implementing the protocol or understanding its design, we discuss it further in the Browser Tools and Testing working group, which is also open to W3C members.